Environment:

# Persisting the MySQL data directory on NFS volumes

Requirements:

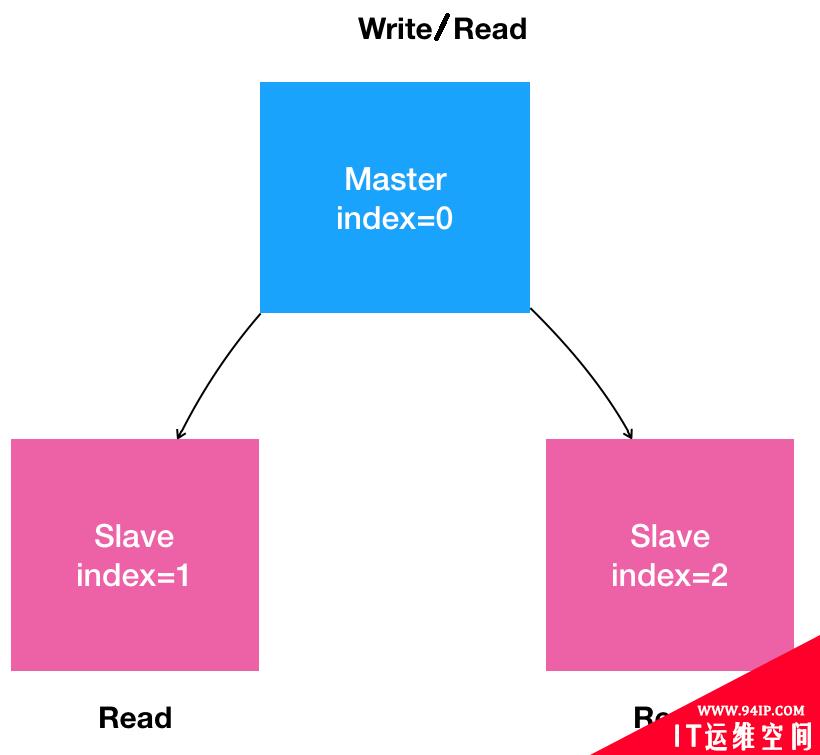

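This article shows how to run a stateful application with the StatefulSet controller, using a multi-replica MySQL database as the example. The example topology has one primary server and several replicas, and it uses asynchronous row-based replication.

- Build a MySQL cluster with master-slave replication

- There is one master node and several slave nodes

- Slave nodes can be scaled out horizontally

- All write operations must be executed on the master node

- Read operations can be executed on any node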

Part 1. Deploy the NFS server

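    # Install the NFS service on the server to provide NFS storage
    # 1. Install nfs-utils
    yum install nfs-utils                 # CentOS
    apt-get install nfs-kernel-server     # or, on Ubuntu
    # 2. Start the service
    systemctl enable nfs-server
    systemctl start nfs-server
    # 3. Create the shared directory
    mkdir /home/nfs
    # 4. Edit the export configuration
    vim /etc/exports
    # Syntax: <shared path> <client address>(<options>)
    # The client address can be an IP, a subnet, a domain name, or * for any host
    /home/nfs *(rw,async,no_root_squash)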

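    # Re-export the shares and check the configuration
    exportfs -arv
    # 5. Restart the service
    systemctl restart nfs-server
    # 6. List the NFS exports from the server itself
    # showmount -e <server IP> (if the command is not found, install nfs-utils, which provides showmount)
    showmount -e 127.0.0.1
    /home/nfs *
    # 7. Test-mount from a client [every Kubernetes node needs the NFS client installed]
    [root@master-1 ~]# yum install nfs-utils       # CentOS
    [root@master-1 ~]# apt-get install nfs-common  # or, on Ubuntu
    [root@master-1 ~]# mkdir /test
    [root@master-1 ~]# mount -t nfs 172.16.201.209:/home/nfs /test
    # Unmount
    [root@master-1 ~]# umount /test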
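Step 4 above edits /etc/exports by hand. As a minimal sketch of the same step in script form, the helper below appends an entry only when it is not already present. The function name add_export and the scratch file are assumptions made for illustration; on a real server you would point it at /etc/exports and then run exportfs -arv.

```shell
# Hypothetical helper: append "<path> <client>(<options>)" unless the exact line exists.
add_export() {
    exports_file=$1   # normally /etc/exports
    share_path=$2     # directory to share
    client=$3         # IP, subnet, domain name, or *
    options=$4        # comma-separated export options
    entry="${share_path} ${client}(${options})"
    if grep -qxF -- "$entry" "$exports_file" 2>/dev/null; then
        echo "already present: $entry"
    else
        echo "$entry" >> "$exports_file"
        echo "added: $entry"
    fi
}

# Demonstrate against a scratch file rather than the real /etc/exports.
demo_file=$(mktemp)
add_export "$demo_file" /home/nfs '*' rw,async,no_root_squash
add_export "$demo_file" /home/nfs '*' rw,async,no_root_squash   # second call is a no-op
cat "$demo_file"
```

Running the helper twice leaves a single line in the file, which is the point of the check: repeated provisioning runs do not pile up duplicate exports.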

Part 2. Configure dynamic PV provisioning (NFS StorageClass) and create PVCs

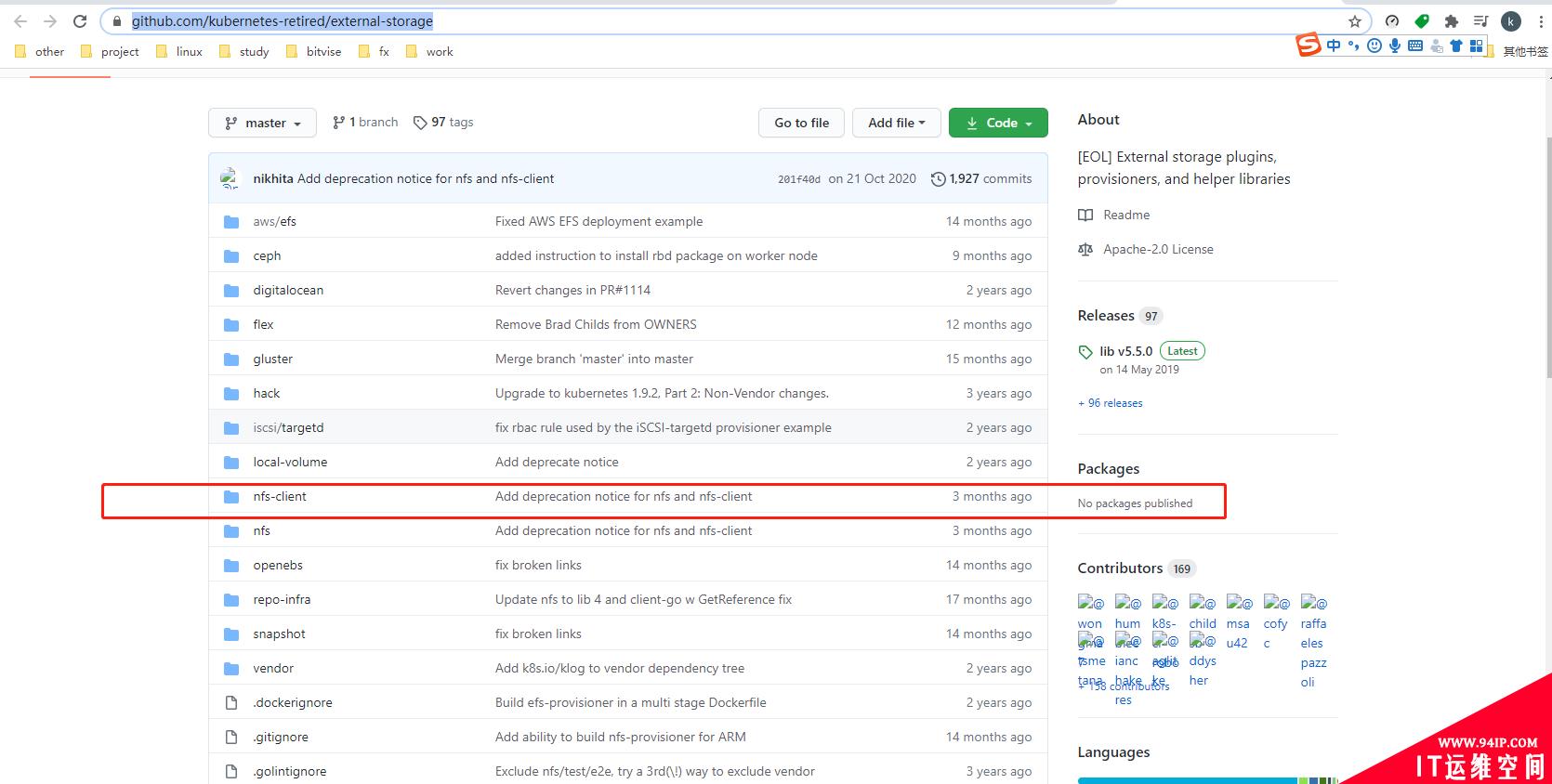

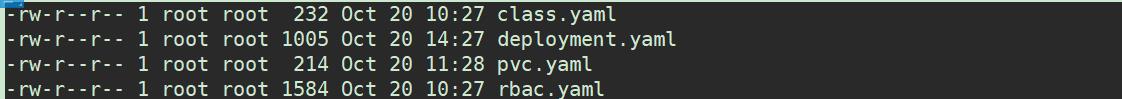

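Deploy the NFS plugin that creates PVs automatically. It involves four YAML files in total, and the official documentation describes them in detail: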

https://github.com/kubernetes-incubator/external-storage

    root@k8s-master1:~# mkdir /root/pvc
    root@k8s-master1:~# cd /root/pvc

Create rbac.yaml

    root@k8s-master1:pvc# cat rbac.yaml
    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: nfs-client-provisioner
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

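Create deployment.yaml

The official default image may not be downloadable from mainland China. In that case you can use this image instead:

fxkjnj/nfs-client-provisioner:latest

The manifest also defines the NFS server address and the name of the shared directory: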

    root@k8s-master1:pvc# cat deployment.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: fxkjnj/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                - name: NFS_SERVER
                  value: 172.16.201.209
                - name: NFS_PATH
                  value: /home/nfs
          volumes:
            - name: nfs-client-root
              nfs:
                server: 172.16.201.209
                path: /home/nfs

Create class.yaml

    root@k8s-master1:pvc# cat class.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "true"

Deploy

    root@k8s-master1:pvc# kubectl apply -f .
    # List the storage classes
    root@k8s-master1:pvc# kubectl get sc
    NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  25h
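Before building on the StorageClass, it can help to confirm that a claim against it is actually provisioned. The following is a rough sketch that writes a small test claim to disk; the claim name test-claim, the 1Mi size and the ReadWriteMany access mode are assumptions chosen for illustration, and only the storageClassName comes from class.yaml above. The kubectl steps are left as comments because they need cluster access.

```shell
# Write a hypothetical test claim into the current directory.
cat > test-claim.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim                        # hypothetical name
spec:
  storageClassName: managed-nfs-storage   # must match metadata.name in class.yaml
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF
echo "wrote test-claim.yaml"

# On a machine with cluster access:
#   kubectl apply -f test-claim.yaml
#   kubectl get pvc test-claim          # STATUS should become Bound
#   kubectl delete -f test-claim.yaml   # clean up afterwards
```

If the claim stays Pending, the provisioner Pod's logs and the NFS export settings are the first places to look.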

Part 3. Write the MySQL YAML files

The MySQL example deployment consists of one ConfigMap, two Services, and one StatefulSet.

ConfigMap:

vim mysql-configmap.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mysql
      labels:
        app: mysql
    data:
      master.cnf: |
        # Apply this config only on the master.
        [mysqld]
        log-bin
      slave.cnf: |
        # Apply this config only on slaves.
        [mysqld]
        super-read-only

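Notes:

Here we define two MySQL configuration files, master.cnf and slave.cnf.

- master.cnf enables log-bin, so master-slave replication can use the binary log.

- slave.cnf enables super-read-only, so a slave accepts only the writes that arrive through replication from the master and rejects every other write. From a user's point of view the slave is read-only.

- master.cnf and slave.cnf are mounted into a directory in the container as configuration files.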
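Which of the two files a Pod ends up with depends on the ordinal at the end of its hostname, as the init-mysql container in the StatefulSet later in this article shows. The sketch below restates that rule in plain POSIX shell so it can be tried outside a cluster; the function names are made up for illustration, and the real init container uses a bash regular expression instead.

```shell
# Extract the trailing ordinal from a StatefulSet Pod hostname, e.g. mysql-2 -> 2.
pod_ordinal() {
    ordinal=${1##*-}                 # keep what follows the last '-'
    case $ordinal in
        ''|*[!0-9]*) return 1 ;;     # reject names without a numeric suffix
    esac
    echo "$ordinal"
}

# Ordinal 0 is the master; every other ordinal is a slave.
config_for() {
    ordinal=$(pod_ordinal "$1") || return 1
    if [ "$ordinal" -eq 0 ]; then echo master.cnf; else echo slave.cnf; fi
}

# The init container adds 100 so that no Pod gets the reserved server-id 0.
server_id_for() {
    ordinal=$(pod_ordinal "$1") || return 1
    echo $((100 + $ordinal))
}

for host in mysql-0 mysql-1 mysql-2; do
    echo "$host: $(config_for "$host"), server-id=$(server_id_for "$host")"
done
```

For the three hostnames in the loop this prints master.cnf with server-id 100 for mysql-0, and slave.cnf with server-ids 101 and 102 for the other two.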

Service:

vim mysql-services.yaml

    # Headless service for stable DNS entries of StatefulSet members.
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql
      labels:
        app: mysql
    spec:
      ports:
      - name: mysql
        port: 3306
      clusterIP: None
      selector:
        app: mysql
    ---
    # Client service for connecting to any MySQL instance for reads.
    # For writes, you must instead connect to the master: mysql-0.mysql.
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-read
      labels:
        app: mysql
    spec:
      ports:
      - name: mysql
        port: 3306
      selector:
        app: mysql

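Notes:

clusterIP: None makes the first Service a headless Service. Compared with a regular Service, the only difference is that spec.clusterIP is set to None, so there is no cluster IP and clients communicate with the Pod endpoints directly. The headless Service maintains the network identity of the Pods, while the StatefulSet assigns each Pod a numeric ordinal and deploys the Pods in ordinal order. The StatefulSet must also set serviceName: "mysql" so that the controller knows which Service governs it.

The network identity provided by the StatefulSet controller shows up in the hostname and in the Pod's DNS A record:

• Hostname: <statefulset-name>-<ordinal>

For example: mysql-0

• Pod DNS A record: <statefulset-name>-<ordinal>.<service-name>.<namespace>.svc.cluster.local (Pods reach each other through these DNS A records)

For example:

mysql-0.mysql.default.svc.cluster.local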
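The naming rule is mechanical, so it can be sketched as a small shell function. The function name pod_fqdn is an assumption for illustration, and the sketch assumes the cluster domain is the default cluster.local, as in the example above.

```shell
# Build the DNS A record name of a StatefulSet Pod.
# Arguments: <statefulset-name> <ordinal> <service-name> [namespace]
pod_fqdn() {
    statefulset=$1
    ordinal=$2
    service=$3
    namespace=${4:-default}   # fall back to the default namespace
    echo "${statefulset}-${ordinal}.${service}.${namespace}.svc.cluster.local"
}

# The three Pods of this article's StatefulSet:
for i in 0 1 2; do
    pod_fqdn mysql "$i" mysql default
done
```

Inside the same namespace the short form mysql-0.mysql is enough, which is why the manifests in this article use it.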

StatefulSet:

vim mysql-statefulset.yaml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: mysql
    spec:
      selector:
        matchLabels:
          app: mysql
      serviceName: mysql
      replicas: 3
      template:
        metadata:
          labels:
            app: mysql
        spec:
          initContainers:
          - name: init-mysql
            image: mysql:5.7
            command:
            - bash
            - "-c"
            - |
              set -ex
              # Generate mysql server-id from pod ordinal index.
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              echo [mysqld] > /mnt/conf.d/server-id.cnf
              # Add an offset to avoid reserved server-id=0 value.
              echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
              # Copy appropriate conf.d files from config-map to emptyDir.
              if [[ $ordinal -eq 0 ]]; then
                cp /mnt/config-map/master.cnf /mnt/conf.d/
              else
                cp /mnt/config-map/slave.cnf /mnt/conf.d/
              fi
            volumeMounts:
            - name: conf
              mountPath: /mnt/conf.d
            - name: config-map
              mountPath: /mnt/config-map
          - name: clone-mysql
            image: fxkjnj/xtrabackup:1.0
            command:
            - bash
            - "-c"
            - |
              set -ex
              # Skip the clone if data already exists.
              [[ -d /var/lib/mysql/mysql ]] && exit 0
              # Skip the clone on master (ordinal index 0).
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              [[ $ordinal -eq 0 ]] && exit 0
              # Clone data from previous peer.
              ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql
              # Prepare the backup.
              xtrabackup --prepare --target-dir=/var/lib/mysql
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
          containers:
          - name: mysql
            image: mysql:5.7
            env:
            - name: MYSQL_ALLOW_EMPTY_PASSWORD
              value: "1"
            ports:
            - name: mysql
              containerPort: 3306
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
            resources:
              requests:
                cpu: 500m
                memory: 1Gi
            livenessProbe:
              exec:
                command: ["mysqladmin", "ping"]
              initialDelaySeconds: 30
              periodSeconds: 10
              timeoutSeconds: 5
            readinessProbe:
              exec:
                # Check we can execute queries over TCP (skip-networking is off).
                command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
              initialDelaySeconds: 5
              periodSeconds: 2
              timeoutSeconds: 1
          - name: xtrabackup
            image: fxkjnj/xtrabackup:1.0
            ports:
            - name: xtrabackup
              containerPort: 3307
            command:
            - bash
            - "-c"
            - |
              set -ex
              cd /var/lib/mysql
              # Determine binlog position of cloned data, if any.
              if [[ -f xtrabackup_slave_info && "x$(<xtrabackup_slave_info)" != "x" ]]; then
                # XtraBackup already generated a partial "CHANGE MASTER TO" query
                # because we're cloning from an existing slave. (Need to remove the trailing semicolon!)
                cat xtrabackup_slave_info | sed -E 's/;$//g' > change_master_to.sql.in
                # Ignore xtrabackup_binlog_info in this case (it's useless).
                rm -f xtrabackup_slave_info xtrabackup_binlog_info
              elif [[ -f xtrabackup_binlog_info ]]; then
                # We're cloning directly from master. Parse binlog position.
                [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
                rm -f xtrabackup_binlog_info xtrabackup_slave_info
                echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                      MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
              fi
              # Check if we need to complete a clone by starting replication.
              if [[ -f change_master_to.sql.in ]]; then
                echo "Waiting for mysqld to be ready (accepting connections)"
                until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done
                echo "Initializing replication from clone position"
                mysql -h 127.0.0.1 \
                      -e "$(<change_master_to.sql.in), \
                              MASTER_HOST='mysql-0.mysql', \
                              MASTER_USER='root', \
                              MASTER_PASSWORD='', \
                              MASTER_CONNECT_RETRY=10; \
                            START SLAVE;" || exit 1
                # In case of container restart, attempt this at-most-once.
                mv change_master_to.sql.in change_master_to.sql.orig
              fi
              # Start a server to send backups when requested by peers.
              exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
                "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
          volumes:
          - name: conf
            emptyDir: {}
          - name: config-map
            configMap:
              name: mysql
      volumeClaimTemplates:
      - metadata:
          name: data
        spec:
          storageClassName: "managed-nfs-storage"
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 0.5Gi

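Notes:

- The xtrabackup tool backs up the data used to initialize each container; see https://www.toutiao.com/i6999565563710292484

- The ncat tool available on Linux copies that initialization data [the ncat command pulls the data from the previous node over the network to the local node]; see https://www.cnblogs.com/chengd/p/7565280.html

- MySQL binlog replication keeps the master and the slaves consistent

- The ordinal in the Pod's hostname decides whether the node is the master or a slave, and the matching configuration file is copied into place accordingly

- mysqladmin ping serves as the database health check

- Dynamic PV provisioning on NFS storage (StorageClass) persists the MySQL data files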
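To make the binlog step more concrete, here is a minimal sketch of how a "file plus position" record can be turned into the first half of a CHANGE MASTER TO statement, which is what the xtrabackup sidecar does with xtrabackup_binlog_info. The function name and the sample binlog file name and position are made up for illustration, and the sketch uses POSIX read rather than the bash regular expression in the manifest.

```shell
# Turn "<binlog file> <position>" (whitespace separated) into a partial SQL statement.
change_master_from_binlog_info() {
    log_file='' log_pos=''
    # read returns non-zero when the last line lacks a newline, so check the fields instead.
    read -r log_file log_pos rest < "$1" || :
    [ -n "$log_file" ] && [ -n "$log_pos" ] || return 1
    printf "CHANGE MASTER TO MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s\n" "$log_file" "$log_pos"
}

# Sample input with made-up values, in the same two-column layout.
info=$(mktemp)
printf 'mysql-0-bin.000003\t154\n' > "$info"
change_master_from_binlog_info "$info"
```

The sidecar then appends MASTER_HOST, MASTER_USER and the other options to this partial statement before running START SLAVE.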

Part 4. Deploy and test

    root@k8s-master1:~/kubernetes/mysql# ll
    total 24
    drwxr-xr-x 2 root root 4096 Nov  3 16:42 ./
    drwxr-xr-x 8 root root 4096 Nov  3 13:33 ../
    -rw-r--r-- 1 root root  278 Nov  2 22:15 mysql-configmap.yaml
    -rw-r--r-- 1 root root  556 Nov  2 22:08 mysql-services.yaml
    -rw-r--r-- 1 root root 5917 Nov  3 14:22 mysql-statefulset.yaml
    root@k8s-master1:~/kubernetes/mysql# kubectl apply -f .
    configmap/mysql created
    service/mysql created
    service/mysql-read created
    statefulset.apps/mysql created
    # Watch the Pod status as it changes:
    root@k8s-master1:~/kubernetes/mysql# kubectl get pods -l app=mysql --watch
    NAME      READY   STATUS    RESTARTS   AGE
    mysql-0   2/2     Running   0          3h12m
    mysql-1   2/2     Running   0          3h11m
    mysql-2   2/2     Running   0          3h10m

As shown, once the StatefulSet has started successfully, three Pods are running.

Next, we can send requests to this MySQL cluster and run a few SQL statements to verify that it works:

    kubectl run mysql-client --image=mysql:5.7 -i --rm --restart=Never --\
      mysql -h mysql-0.mysql <<EOF
    CREATE DATABASE test;
    CREATE TABLE test.messages (message VARCHAR(250));
    INSERT INTO test.messages VALUES ('hello');
    EOF

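Here we started a container and used the MySQL client to create a database and a table and to insert a row. Note that the MySQL address we connect to must be mysql-0.mysql, the DNS A record of the master node (Pods reach each other through DNS A records), because only the master can handle writes.

By connecting to the mysql-read Service instead, we can run read queries, as shown below: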

    kubectl run mysql-client --image=mysql:5.7 -i -t --rm --restart=Never --\
      mysql -h mysql-read -e "SELECT * FROM test.messages"
    # You should get output like this:
    Waiting for pod default/mysql-client to be running, status is Pending, pod ready: false
    +---------+
    | message |
    +---------+
    | hello   |
    +---------+
    pod "mysql-client" deleted

Or:

    root@k8s-master1:~/kubernetes/mysql# kubectl run -it --rm --image=mysql:5.7 --restart=Never mysql-client -- mysql -h mysql-read
    If you don't see a command prompt, try pressing enter.
    Welcome to the MySQL monitor.  Commands end with ; or \g.
    Your MySQL connection id is 7251
    Server version: 5.7.36 MySQL Community Server (GPL)
    Copyright (c) 2000, 2021, Oracle and/or its affiliates.
    Oracle is a registered trademark of Oracle Corporation and/or its
    affiliates. Other names may be trademarks of their respective
    owners.
    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
    mysql> SELECT * FROM test.messages;
    +---------+
    | message |
    +---------+
    | hello   |
    +---------+
    1 row in set (0.00 sec)
    mysql>
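The read and write paths above differ only in the host the client connects to. As a rough sketch, that routing rule can be captured in a pair of shell functions; mysql_host_for and run_sql are hypothetical names introduced here, and run_sql is only defined, not called, because it needs kubectl and access to the cluster.

```shell
# Map an operation type to the host that should serve it.
mysql_host_for() {
    case $1 in
        write) echo mysql-0.mysql ;;   # only the master accepts writes
        read)  echo mysql-read ;;      # any ready Pod behind the Service may answer reads
        *)     echo "unknown operation: $1" >&2; return 1 ;;
    esac
}

# Run one SQL statement through a throwaway client Pod (requires kubectl and cluster access).
# Usage: run_sql read "SELECT * FROM test.messages"
run_sql() {
    host=$(mysql_host_for "$1") || return 1
    kubectl run mysql-client --image=mysql:5.7 -i --rm --restart=Never -- \
        mysql -h "$host" -e "$2"
}

echo "writes go to: $(mysql_host_for write)"
echo "reads go to:  $(mysql_host_for read)"
```

Keeping the host choice in one place makes it harder to send a write to mysql-read by accident, where it could land on a slave running with super-read-only and be rejected.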

When reposting, please credit: IT运维空间 » 运维技术 » 基于K8S的StatefulSet部署MySQL集群 (Deploying a MySQL cluster with a StatefulSet on K8S)
